Introduction

It’s important to keep up with the latest advancements in data processing to maintain system efficiency and reliability. Kafka is a key part of many data systems and needs careful handling during upgrades. In this article, we’ll look at upgrading Kafka from version 2.5.0 to 3.6.2, covering key steps, challenges, and strategies for a successful upgrade at Brevo.

Problem Statement

In 2021, we started using Kafka as our messaging platform. We focused on scaling to handle billions of messages and onboarding new applications to Kafka, but we neglected Kafka’s release lifecycle maintenance. As we scaled, staying on version 2.5.0 led to production incidents and several major issues:

- Outdated versions made it difficult to roll out updates directly, requiring careful adjustments to numerous settings.

- The protocol version with 2.5 was outdated.

- Stability issues arose with Zookeeper, and brokers occasionally became unreachable.

- The Golang driver was also outdated and did not support new features.

Faced with these challenges, we decided to upgrade all our Kafka clusters.

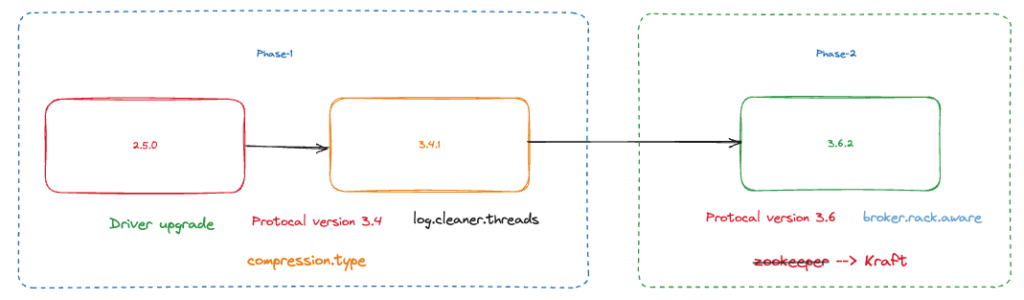

Two-Step Kafka Upgrade Process

Upgrading from an old Kafka release to a recent one often requires a two-step process to ensure compatibility and stability. If you are starting from a release older than 3.4, plan for two rolling bounces: the first takes every broker to 3.4, and the second takes them to 3.6. Going through the intermediate release keeps each version jump small, which makes it easier to preserve system integrity and avoid disruptions.

Important steps:

- Upgrade brokers one at a time.

- Ensure that the share of partitions led by their preferred leader does not drop below 70%.

- Keep the rack layout aligned with the zone/datacenter.

- Upgrade the controller after upgrading all other brokers.
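The 70% preferred-leader threshold above can be checked from the command line. The following is a minimal sketch, not the tooling we used: the function name `preferred_leader_pct` is hypothetical, and it assumes the usual `kafka-topics.sh --describe` line layout (`Topic: ... Partition: ... Leader: ... Replicas: ... Isr: ...`), where the preferred leader is the first broker id in the replica list.

```shell
#!/usr/bin/env bash
# Prints the percentage of partitions whose current leader is the preferred
# replica, i.e. the first broker id in the "Replicas:" list.
# stdin: output of `kafka-topics.sh --describe` (layout assumed, see lead-in).
preferred_leader_pct() {
  awk '
    /Partition:/ && /Leader:/ && /Replicas:/ {
      leader = ""; replicas = ""
      for (i = 1; i < NF; i++) {
        if ($i == "Leader:")   leader = $(i + 1)
        if ($i == "Replicas:") replicas = $(i + 1)
      }
      split(replicas, r, ",")
      total++
      if (leader == r[1]) preferred++
    }
    END {
      if (total == 0) { print 0; exit }
      # Integer percentage, truncated toward zero.
      printf "%d\n", (preferred * 100) / total
    }
  '
}
```

A possible use is `kafka-topics.sh --bootstrap-server localhost:9092 --describe | preferred_leader_pct`, where the bootstrap address is a placeholder, pausing the rollout whenever the printed value falls below 70.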

Our Infrastructure: Scale and Complexity

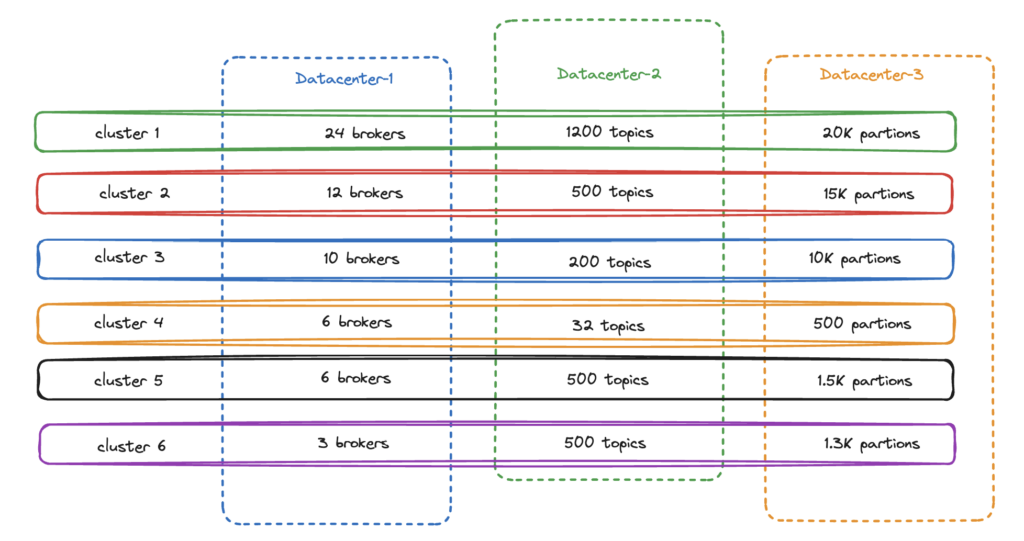

Clusters

At Brevo, we run six Kafka clusters, each with a different size and workload. Together they host over 3,000 topics and more than 40,000 partitions. Upgrading a system this large and widely used was a big task, so we planned it carefully and worked through it with a steady, organized approach.

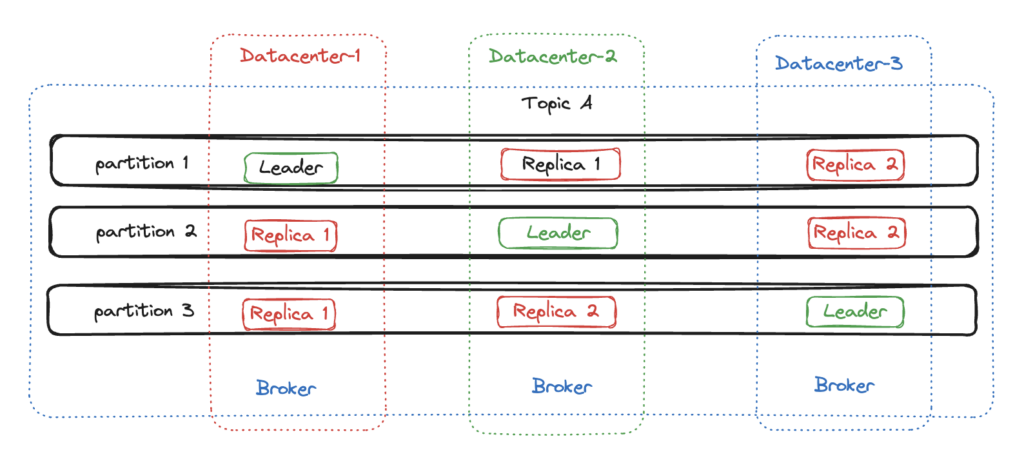

Topics

The partitions of each topic are spread across all three data centers for better resilience in case of failures. We achieve this with rack awareness settings and a minimum replication factor of 3, which lets Kafka place the replicas of a partition evenly across the racks.

default.replication.factor = 3

As an example, we configured the following setting in the Kafka server.properties file for the brokers in Datacenter-1.

broker.rack = "Datacenter-1"
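To verify that replicas really end up in different data centers, the replica list of each partition can be compared against a broker-to-rack mapping. This is a rough sketch under stated assumptions: the function name `partitions_below_rack_spread`, the `broker:rack` argument format, and the rack names other than Datacenter-1 are all hypothetical, and the input is assumed to follow the usual `kafka-topics.sh --describe` layout.

```shell
#!/usr/bin/env bash
# Lists partitions (as topic-partition) whose replicas cover fewer than
# MIN_RACKS distinct racks.
# $1: space-separated broker:rack pairs (hypothetical format for this sketch)
# $2: minimum number of distinct racks expected per partition
# stdin: output of `kafka-topics.sh --describe`
partitions_below_rack_spread() {
  awk -v map="$1" -v min="$2" '
    BEGIN {
      n = split(map, pairs, " ")
      for (i = 1; i <= n; i++) {
        split(pairs[i], kv, ":")
        rack[kv[1]] = kv[2]
      }
    }
    /Partition:/ && /Replicas:/ {
      topic = ""; part = ""; replicas = ""
      for (i = 1; i < NF; i++) {
        if ($i == "Topic:")     topic = $(i + 1)
        if ($i == "Partition:") part = $(i + 1)
        if ($i == "Replicas:")  replicas = $(i + 1)
      }
      split("", seen)   # portable way to clear an awk array
      count = 0
      m = split(replicas, ids, ",")
      for (j = 1; j <= m; j++) {
        r = rack[ids[j]]
        if (r != "" && !(r in seen)) { seen[r] = 1; count++ }
      }
      if (count < min) print topic "-" part
    }
  '
}
```

For example, `kafka-topics.sh --bootstrap-server localhost:9092 --describe | partitions_below_rack_spread "1:Datacenter-1 2:Datacenter-2 3:Datacenter-3" 3` would print nothing when every partition spans three racks (the address and mapping are placeholders).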

We smoothly upgraded from Kafka 2.5.0 to 3.6.2, showing our careful planning and flawless execution.

Key Steps in the Kafka Upgrade Process

We followed two major steps to upgrade smoothly without any downtime or service disruption.

Upgrade to 3.4.1:

- Upgrade the driver and ensure that it supports backward compatibility.

- Update the initial configuration:

  - Set inter.broker.protocol.version to 2.5.0 so that upgraded brokers keep speaking the old protocol with brokers that have not been upgraded yet.

  - Adjust log.cleaner.threads based on available resources for optimized performance.

  - Define compression.type as one of the following: ‘gzip’, ‘snappy’, ‘lz4’, ‘zstd’.

- Update the Kafka binary files on the server and then restart it.

- Repeat the configuration update and the binary update with restart on each remaining server, one at a time.

- Finally, update inter.broker.protocol.version to 3.4.1 in server.properties and restart the Kafka service, again one broker at a time.
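The configuration changes in the steps above (setting inter.broker.protocol.version and similar keys in server.properties) are easy to get wrong when done by hand on many brokers. As an illustration only, a small helper could rewrite or append a key in a properties file; the function name `set_property` is hypothetical and this is a sketch, not the script we ran.

```shell
#!/usr/bin/env bash
# Sets KEY=VALUE in a Java properties file: every uncommented line that
# defines KEY is rewritten, and the key is appended if it is absent.
# Note: awk -v interprets backslash escapes, so keep values simple.
set_property() {
  local file="$1" key="$2" value="$3"
  local tmp
  tmp="$(mktemp)"
  awk -v key="$key" -v value="$value" '
    {
      line = $0
      sub(/^[ \t]+/, "", line)
      # Match "key", optional blanks, then "=" (literal key, no regex).
      if (index(line, key) == 1 && substr(line, length(key) + 1) ~ /^[ \t]*=/) {
        print key "=" value
        found = 1
        next
      }
      print
    }
    END { if (!found) print key "=" value }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Write back in place so the original file keeps its owner and mode.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

With such a helper, the final step of each phase becomes a one-liner such as `set_property /etc/kafka/config/server.properties inter.broker.protocol.version 3.4.1` (the path is an assumption about where the broker configuration lives), followed by a restart of that broker.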

Upgrade to 3.6.2:

- Update the Kafka files on the server and restart one broker at a time.

- Finally, set inter.broker.protocol.version to 3.6.2 in server.properties and restart the Kafka service on each broker.

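The shell script below updates the Kafka binaries on a server and restarts the service. It does not change the other settings described in the steps above, so you still need to update those yourself.

Before running it, set NEW_KAFKA_VERSION in the script to the version you want to upgrade to.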

    #!/bin/bash
    # Abort on the first error so a failed download or move never leads to a restart.
    set -euo pipefail

    # Kafka version to upgrade to
    NEW_KAFKA_VERSION="3.6.2"
    BACKUP_DATE=$(date +"%Y%m%d")
    RELEASE="kafka_2.13-$NEW_KAFKA_VERSION"
    BACKUP_DIR="/etc/kafka_bkup_$BACKUP_DATE"

    # Download and extract the specified Kafka version
    wget "https://archive.apache.org/dist/kafka/$NEW_KAFKA_VERSION/$RELEASE.tgz"
    tar -xzf "$RELEASE.tgz"

    # Stop the Kafka service before touching its files
    systemctl stop kafka

    # Back up the current Kafka installation
    mkdir -p "$BACKUP_DIR"
    mv /etc/kafka/* "$BACKUP_DIR"/

    # Move the new version's files to the Kafka directory
    for item in bin config libs NOTICE site-docs LICENSE licenses; do
      mv "$RELEASE/$item" /etc/kafka/
    done

    # Assumption: the broker reads /etc/kafka/config/server.properties, which the
    # step above replaced with the distribution default. Restore the backed-up copy.
    if [ -f "$BACKUP_DIR/config/server.properties" ]; then
      cp -p "$BACKUP_DIR/config/server.properties" /etc/kafka/config/server.properties
    fi

    # Restart the Kafka service
    systemctl start kafka

    # Print the installed Kafka version
    echo "Kafka has been upgraded to version:"
    /etc/kafka/bin/kafka-topics.sh --version
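Because brokers are upgraded one at a time, it helps to wait until the cluster has caught up before running the script on the next server. The sketch below shows one way to do that; it is an illustration, not part of our original script. The helper names `wait_until` and `no_under_replicated_partitions` and the `BOOTSTRAP` variable are hypothetical, and the check assumes `kafka-topics.sh` is on the PATH and prints nothing for `--under-replicated-partitions` when every partition is fully replicated.

```shell
#!/usr/bin/env bash
# Re-runs a command until it succeeds or the timeout (in seconds) expires.
# Usage: wait_until TIMEOUT INTERVAL COMMAND [ARGS...]
wait_until() {
  local timeout="$1" interval="$2"
  shift 2
  local waited=0
  until "$@"; do
    if [ "$waited" -ge "$timeout" ]; then
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
}

# Succeeds when the cluster reports no under-replicated partitions.
# Assumption: BOOTSTRAP holds a reachable broker address (placeholder default).
no_under_replicated_partitions() {
  local out
  out="$(kafka-topics.sh --bootstrap-server "${BOOTSTRAP:-localhost:9092}" \
         --describe --under-replicated-partitions)" || return 1
  [ -z "$out" ]
}
```

After restarting a broker, `wait_until 600 10 no_under_replicated_partitions` would block for up to ten minutes and return a non-zero status if replicas have still not caught up, which is a reasonable signal to stop the rollout and investigate.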

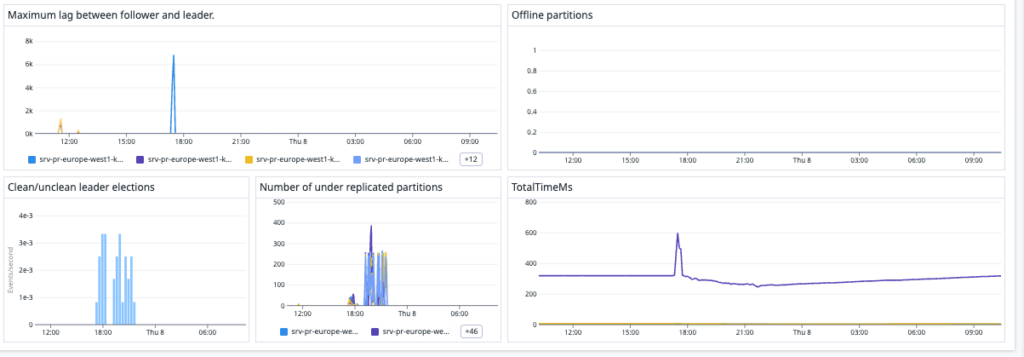

Monitoring

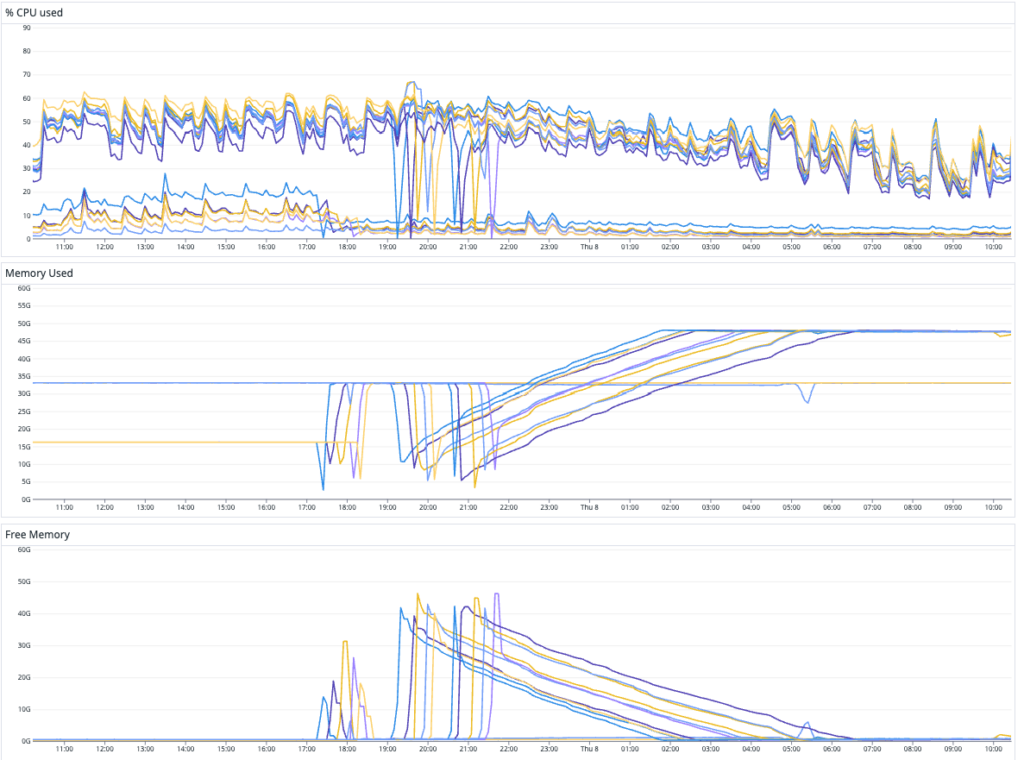

During the upgrade process, we closely monitor several key metrics.

- The lag between Follower and Leader: During the upgrade, you may notice a temporary increase in lag, but it should get better once the data is synced. We don’t expect the lag to stick around.

- Offline Partitions: There should be no offline partitions. If there are, a partition has no active leader and cannot serve reads or writes.

- Under-Replicated Partitions: During the upgrade, there may be a temporary increase in under-replicated partitions, but it should be limited to only one replica per partition.

- CPU Usage: Kafka’s CPU usage should stay within the hardware’s specified limits, with no significant spikes anticipated.

- Memory:

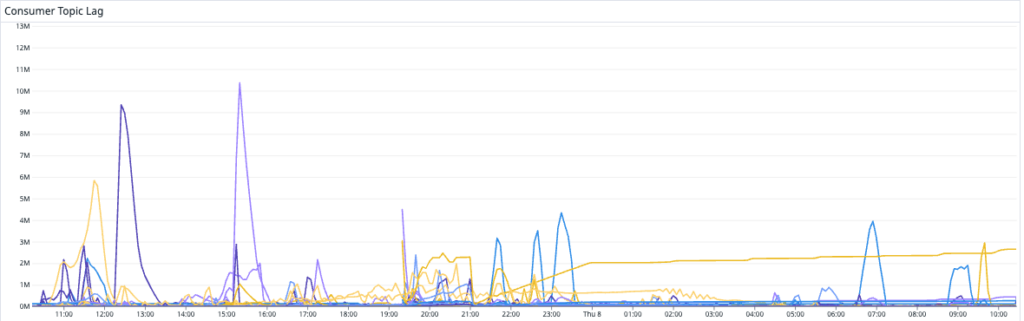

- Consumer Lag: Monitoring consumer lag is key to checking Kafka server health. It should stay stable but may spike occasionally, like when a leader moves. These spikes should return to normal within a few minutes.
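Two of the metrics above, under-replicated partitions and consumer lag, can also be spot-checked from the Kafka command-line tools while a broker restarts. The following is a minimal sketch rather than our monitoring setup: the function names are hypothetical, and the parsing assumes the usual output layout of `kafka-topics.sh --describe` and of `kafka-consumer-groups.sh --describe --group <group>` (a header row containing a `LAG` column).

```shell
#!/usr/bin/env bash
# Counts partitions whose in-sync replica set is smaller than the replica list.
# stdin: output of `kafka-topics.sh --describe`
count_under_replicated() {
  awk '
    /Partition:/ && /Replicas:/ && /Isr:/ {
      replicas = ""; isr = ""
      for (i = 1; i < NF; i++) {
        if ($i == "Replicas:") replicas = $(i + 1)
        if ($i == "Isr:")      isr = $(i + 1)
      }
      if (split(isr, a, ",") < split(replicas, b, ",")) n++
    }
    END { print n + 0 }
  '
}

# Sums the LAG column of a consumer group description.
# The column is located by its header name; rows without a numeric lag
# (shown as "-") are skipped.
# stdin: output of `kafka-consumer-groups.sh --describe --group <group>`
total_consumer_lag() {
  awk '
    col == 0 {
      for (i = 1; i <= NF; i++) if ($i == "LAG") col = i
      next
    }
    $col ~ /^[0-9]+$/ { sum += $col }
    END { print sum + 0 }
  '
}
```

Running both in a loop during a broker restart gives a quick view of whether the temporary spikes described above are shrinking back to normal.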

Conclusion

We upgraded Kafka from version 2.5.0 to 3.6.2 at Brevo. This was complex due to our large infrastructure and challenges with legacy systems and configurations. However, with careful planning, testing, and execution, we achieved a smooth transition. This success highlights our commitment to using the latest technology and delivering reliable, high-performance systems to our users and stakeholders.