Introduction

Email marketing is an effective way to reach out to customers and promote products or services. With email marketing, it is crucial to track a campaign’s performance and measure its success. One way of doing this would be generating campaign reports.

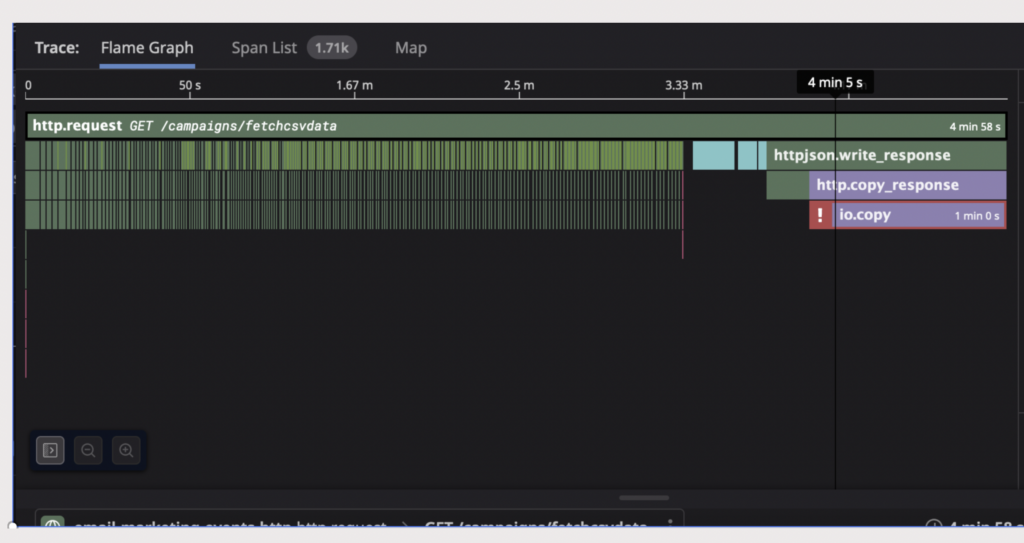

However, when the number of events contained in the report exceeds a certain threshold (~millions), the process of generating the report from ClickHouse becomes inefficient and time-consuming. An HTTP API call to generate the CSV report fails in such cases due to I/O timeout caused by the size of the file.

This problem prompted the need for a solution that eliminates HTTP API calls. Also, we have to transfer all the processing into an asynchronous manner. The proposed solution eventually streamlined the generation of the campaign report CSV, as explained below:

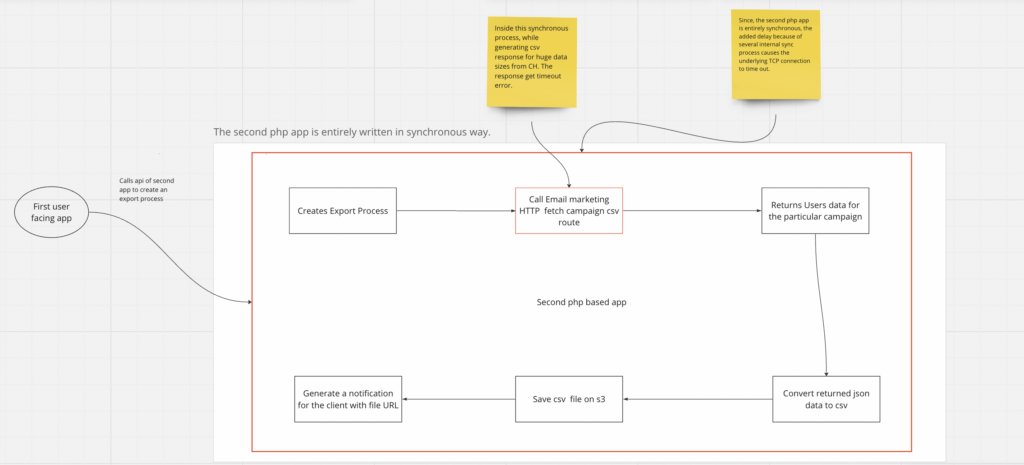

Existing CSV Report Generation Flow

The existing design involves a user requesting to download a CSV report of any sent campaign to a php-based application. This process, in turn, makes a request to another PHP-based second API (hereinafter referred to as “the second php app”). The second php based app was responsible for CSV generation.

This process of generating campaign reports in CSV format was inefficient and time-consuming. So, whenever the number of events exceeds millions of records, it generally breaks. Also, the HTTP API call to generate the CSV report fails in such cases due to I/O timeout.

The diagram below also shows that the entire CSV generation occurs synchronously.

Failure Points

- When data size is huge (more than a million rows in CSV), the process of retrieving data takes up most of the time.

- As data in click-house is accessed via materialized view. We have to be careful in accessing the data, as it would increase the load on clickhouse servers when trying to process more data in a single query.

- A large amount of time taken in fetching and processing of the data causes the underlying TCP connection with the client to Timeout.

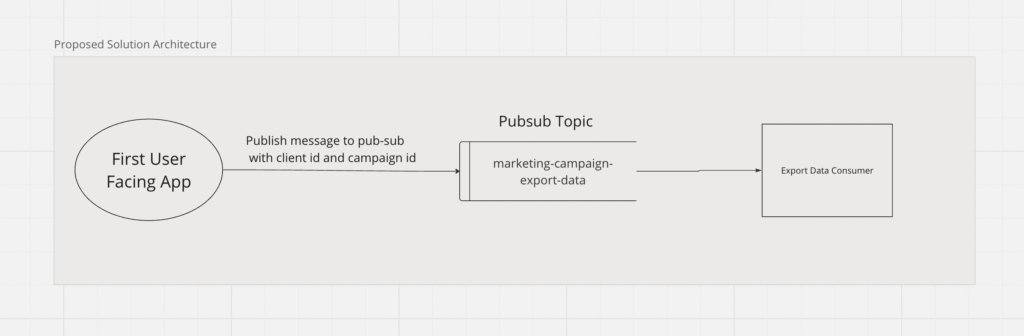

Proposed Solution

The proposed solution aimed to remove the dependency from the second php app from the export CSV process. The first user facing app will contain the API responsible for initiating the export process. Instead of making an API call, a message will be published on Pub-Sub. Pub/Sub is an asynchronous and scalable messaging service that decouples services producing messages from services processing those messages.

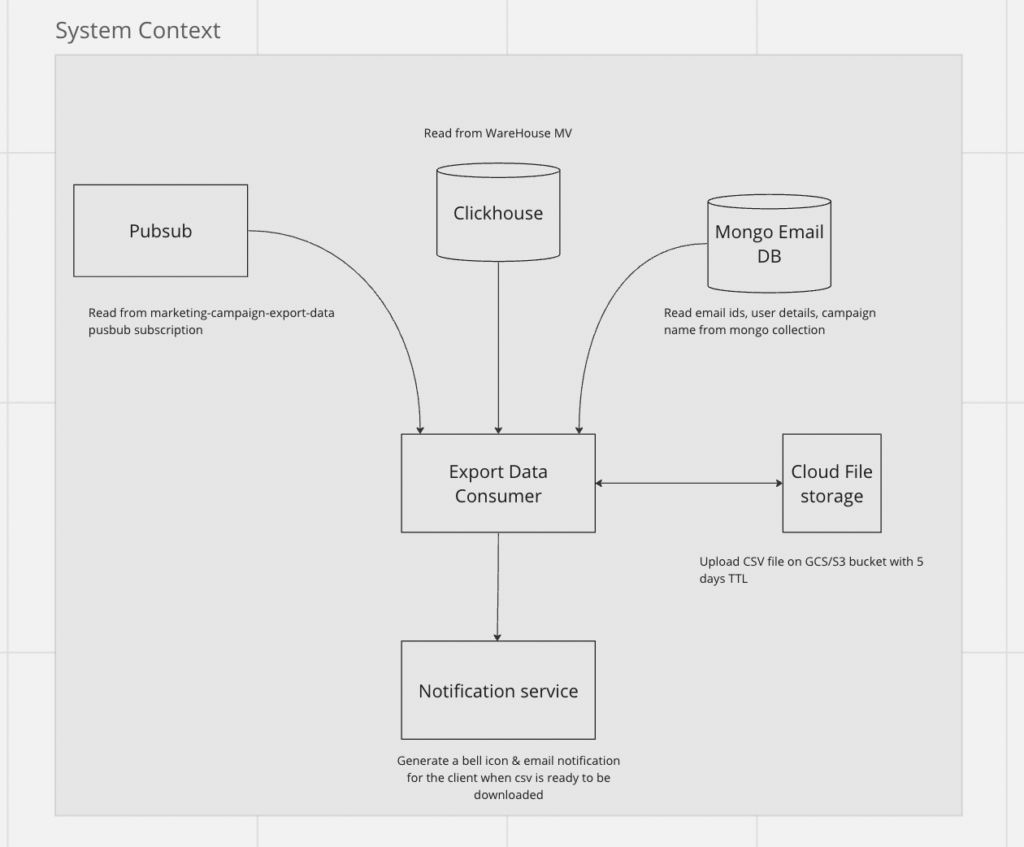

A new app written in go-lang Export Data Consumer consumes the Pub/Sub topic, generates the CSV file, and uploads the data to a GCS or S3 bucket with a TTL of 5-6 days. Finally, the notification API notifies the client when the export process completes, providing the generated file URL.

The proposed solution eliminates the inefficiency and time-consuming process of generating campaign reports in CSV format.

Streamlining CSV Report Generation – Design and Implementation

Design

The design we have is simple and involves removing the second php based app involvement from the export CSV process. The first user facing app contains the API responsible for initiating the export process. Instead of making an API call to the second php based app, we will be directly publishing a message on Pub/Sub.

A new app, Export Data Consumer, is created, which will consume the Pub/Sub topic written in go-lang for its high efficiency. Its sole responsibility is generating the CSV and uploading the data to a GCS Bucket or an AWS S3 bucket (with a TTL of 5-6 days).

Implementation:

The Export Data consumer has two handlers corresponding to the two steps in the process. The first handler retrieves all event data for the campaign ID from a ClickHouse Database. This will be in batches of 10K each. The second handler will read the intermediate ClickHouse Database data, and fetch contextual data from MongoDB (user emails + campaign name). Following that, it merges this data with the ClickHouse Database events data. After which it converts merged data to CSV format. Once the conversion is done we upload the CSV to a GCS bucket/S3 bucket. The end user is then notified via our notification service.

So it will have the following steps:

- Data Fetching: Since we have billions of data in our warehouse, and in order not to add additional latency and high CPU consumptions in I/O operations. We are fetching event data from our warehouse in batches of 10K. As this is a materialized view, it consumes more memory and we have to be careful in choosing the batch size.

- Parser: After fetching the events data from ClickHouse, we retrieve data from other databases containing user and campaign information, such as schedule time and date. We then parse all this data to convert it into a readable CSV format.

- CSV Creations and Notification: The CSV file is generated and uploaded to cloud storage which can easily be downloaded via the shared, secure link. The link has a TTL for 5 days and is notified via a notification system (email and web notification).

By creating a new app Export Data Consumer, this solution eliminates the inefficiency and time-consuming process of generating campaign reports in CSV format. By removing the second php based app involvement from the export CSV process, and directly publishing a message on Pub/Sub, the solution makes the process more efficient and faster.

Conclusion

The solution Streamlining CSV Report Generation ameliorates the process by eliminating HTTP API calls and transferring all the processing into an asynchronous consumer.

This solution is efficient and time-saving, making it easy to export CSV reports of any campaign sent. By ensuring that the solution can handle a large number of events contained in the report without causing an I/O timeout, we can ensure that it is working as intended.

And since we are using a feature toggle, we can facilitate testing and rollout. In addition, by monitoring the new application’s error rate and Pub/Sub subscription message count, we can ensure that the solution is working as intended and handle any errors that may occur.

In conclusion, it eliminates the inefficiency and time-consuming process of generating campaign reports in CSV format and makes the process more efficient and faster. By following the testing and rollout recommendations, we can ensure that the solution is working as intended.

Result

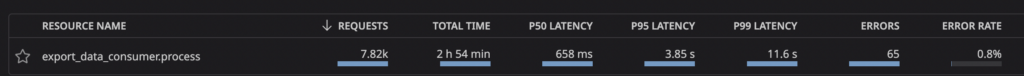

The P95 latency has been reduced to less than 4 sec from 10-12 sec previously, with an error percentage of less than 1%.

The system processes, generates, and downloads a file size of 482 MB containing over 1.2M rows in less than 3 minutes. Thus, eliminating the I/O timeout errors previously encountered, and Streamlining CSV Report Generation.