JavaScript is a single-threaded programming language, so on its own it cannot run operations in parallel. To overcome this, we can use the worker_threads module of Node.js, which lets us spawn additional threads in our Node.js application.

At Brevo, our application endpoints sometimes expect large payloads that need to be parsed with JSON.parse(), which blocks the event loop while it runs.

Using the worker threads module of Node.js

According to the official documentation:

Workers (threads) are useful for performing CPU-intensive JavaScript operations. They do not help much with I/O-intensive work. The Node.js built-in asynchronous I/O operations are more efficient than Workers can be.

By utilizing the worker_threads module, we have the capability to offload our CPU-intensive parsing task to a separate thread. This prevents it from blocking the main event loop. We are using worker threads to parse payloads that are greater than a certain size limit.
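As a rough illustration of the size-based decision described above, here is a minimal sketch. The names parsePayload, parseInWorker and SIZE_LIMIT_BYTES, as well as the 1 MiB cutoff, are assumptions made for this example and not the values we use in production. The worker body is passed inline with the eval option only so the sketch fits in one file; a real application would more likely point to a separate worker file.

```javascript
const { Worker } = require('worker_threads');

// Hypothetical cutoff: payloads above this size are parsed off the main thread.
const SIZE_LIMIT_BYTES = 1024 * 1024;

// Worker body kept inline (eval: true) so the sketch fits in a single file.
const PARSER_SOURCE = `
  const { parentPort, workerData } = require('worker_threads');
  parentPort.postMessage(JSON.parse(workerData));
`;

// Parses the raw JSON string in a separate thread.
function parseInWorker(raw) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(PARSER_SOURCE, { eval: true, workerData: raw });
    worker.once('message', resolve);
    // Invalid JSON throws inside the worker and surfaces here as 'error'.
    worker.once('error', reject);
  });
}

// Small payloads are parsed inline; large ones go to a worker thread.
async function parsePayload(raw, limit = SIZE_LIMIT_BYTES) {
  if (Buffer.byteLength(raw) <= limit) {
    return JSON.parse(raw);
  }
  return parseInWorker(raw);
}

parsePayload('{"hello":"world"}').then((value) => console.log(value));
```

Note that both the raw string and the parsed result are copied between threads with the structured clone algorithm, which has a cost of its own, so the right cutoff is something to measure rather than guess.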

Since the release of Node.js v10.5.0, there is a new worker_threads module available, which has been stable since Node.js v12 LTS.

- It offers APIs for handling CPU-intensive tasks.

- It moves the CPU-intensive workload to a separate thread.

- It allows the main thread to remain available for new requests.

- Each worker thread operates as an independent entity with its own event loop.

This keeps the main thread’s event loop free for additional incoming requests. Separating work between the main thread and worker threads not only helps to achieve better concurrency but also lets us use the available system resources more effectively.
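The independence of the two event loops can be observed directly. The sketch below is an illustration only: countTicksWhileWorkerBusy and the inline worker body are names and code invented for this example. The worker busy-waits for a given time while the main thread counts how many of its own timer callbacks still fire.

```javascript
const { Worker } = require('worker_threads');

// Worker body: block its own thread for about `workerData` milliseconds.
const BUSY_SOURCE = `
  const { parentPort, workerData } = require('worker_threads');
  const end = Date.now() + workerData;
  while (Date.now() < end) {} // busy-wait, blocks only this worker's event loop
  parentPort.postMessage('done');
`;

// Counts how many main-thread timer ticks fire while the worker is busy.
function countTicksWhileWorkerBusy(busyMs) {
  return new Promise((resolve, reject) => {
    let ticks = 0;
    const timer = setInterval(() => { ticks += 1; }, 10);
    const worker = new Worker(BUSY_SOURCE, { eval: true, workerData: busyMs });
    worker.once('message', () => {
      clearInterval(timer);
      resolve(ticks);
    });
    worker.once('error', (err) => {
      clearInterval(timer);
      reject(err);
    });
  });
}

countTicksWhileWorkerBusy(200).then((ticks) => {
  console.log(`main thread handled ${ticks} timer ticks while the worker was busy`);
});
```

If the same busy-wait ran on the main thread instead, no timer callback could fire until it finished.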

To utilize it, we can implement the following approach:

Create two files to start

index.js

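    const { Worker, isMainThread } = require('worker_threads');

    if (isMainThread) {
      // Placeholder for whatever value the worker needs
      const dataToPassToNewThread = { input: 'example' };

      // A relative path must start with ./ or ../
      const worker = new Worker('./worker.js', {
        workerData: dataToPassToNewThread
      });

      worker.on('message', (data) => {
        // Result sent by the worker through parentPort.postMessage()
      });
      worker.on('error', (err) => {
        // Uncaught exception thrown inside the worker
      });
      worker.on('exit', (code) => {
        // The worker has stopped; code is its exit code
      });
    }

worker.js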

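    const { isMainThread, workerData, parentPort } = require('worker_threads');

    if (!isMainThread) {
      // Data sent from the main thread is available in workerData.
      // Do the CPU-intensive task here; as a placeholder, the input is passed through.
      const data = workerData;

      // Send the result back to the main thread
      parentPort.postMessage(data);
    }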

In the examples above:

- const { Worker, isMainThread } = require('worker_threads'); loads the worker_threads module.

- isMainThread is a boolean that tells us whether we are on the main thread of our application.

- The Worker class represents an independent JavaScript execution thread.

- new Worker(path): the first argument is the path to the Worker’s main script or module. A relative path must start with ./ or ../.

- The workerData option lets us pass a value to the worker we create; the worker receives a clone of it.

- worker.on('message'): the 'message' event is emitted for any incoming message from the worker thread.

- worker.on('error'): the 'error' event is emitted if the worker thread throws an uncaught exception.

- worker.on('exit'): the 'exit' event is emitted once the worker has stopped.

- parentPort is the communication channel that lets the spawned thread communicate back with the main (parent) thread.

- parentPort.postMessage(data) sends data back to the main thread, where it is received through worker.on('message').
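The three events listed above are often combined into a single promise so that callers can simply await the result. The following is a minimal sketch of that pattern, not part of the worker_threads API; runWorker is a hypothetical helper name, and the inline worker body with eval: true is used only to keep the example in one file.

```javascript
const { Worker } = require('worker_threads');

// Runs a worker and resolves with its first message.
// `options` is passed straight to the Worker constructor (workerData, eval, ...).
function runWorker(filename, options = {}) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(filename, options);
    worker.once('message', resolve);
    worker.once('error', reject);
    worker.once('exit', (code) => {
      // No effect if the promise already settled via 'message' or 'error'.
      reject(new Error(`Worker exited with code ${code} before sending a result`));
    });
  });
}

// Usage with an inline worker body so the sketch runs as a single file.
runWorker(
  "const { parentPort, workerData } = require('worker_threads'); parentPort.postMessage(workerData * 2);",
  { eval: true, workerData: 21 }
).then((result) => console.log(result)); // logs 42
```

With a separate worker file, the call would look like runWorker('./worker.js', { workerData: someValue }), where someValue stands for whatever input the worker expects.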

We were able to overcome latency issues arising from heavy-payload parsing with the use of worker threads.

Problem statement

Some of our public routes expect huge payloads. While monitoring our application, we noticed an increase in the time spent parsing these payloads, which in turn increased the time to response (Tr) of the affected requests. We had to reduce this Tr.

Blocked event loop example

This example demonstrates how the application’s event loop becomes blocked.

- First, initialize the npm project with npm init -y

- Install the express dependency with npm i express

- Create an app.js file with the following code

    const express = require('express')

    const app = express()
    const port = 3000

    app.get('/', (req, res) => {
      // Long synchronous loop: blocks the event loop until it finishes.
      // fac overflows to Infinity early on; the loop is only here to keep the CPU busy.
      let n = 10000000000;
      let fac = 1;
      for (let i = 1; i <= n; i++) {
        fac = fac * i;
      }
      res.json({ factorial: fac })
    })

    app.get('/hello', (req, res) => {
      res.send('Hello')
    })

    app.listen(port, () => {
      console.log(`Example app listening on port ${port}`)
    })

Start the Express application, then hit the endpoint http://localhost:3000 from Postman. The code inside app.js calculates the factorial of a large number. We can expect it to respond in around 10 to 15 seconds.

While waiting, open another tab and enter the endpoint http://localhost:3000/hello.

The expected answer is simple, but it won’t be displayed before the factorial calculation is done.
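The same effect can be reproduced without Express. In the sketch below, a timer that should fire immediately is delayed by synchronous work, just as the /hello request is delayed by the factorial loop. The helper names blockFor and measureTimerDelay are invented for this illustration.

```javascript
// Blocks the current thread for roughly `ms` milliseconds.
function blockFor(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {} // synchronous busy-wait
}

// Schedules a 0 ms timer, then blocks; resolves with how late the timer fired.
function measureTimerDelay(blockMs) {
  return new Promise((resolve) => {
    const scheduledAt = Date.now();
    setTimeout(() => resolve(Date.now() - scheduledAt), 0);
    blockFor(blockMs); // the timer callback cannot run until this returns
  });
}

measureTimerDelay(200).then((delay) => {
  console.log(`0 ms timer fired after ${delay} ms`);
});
```

The reported delay is at least as long as the blocking work, because the event loop cannot pick up the timer callback until the synchronous code has returned.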

Overcoming this problem with the Worker Threads module

This example will demonstrate how to run blocking operations in a separate thread while keeping the main thread free to process new requests.

- Initialize the npm project with npm init -y

- Install the express dependency with npm i express

- Create an app.js file with the following code

    const express = require('express')
    const { Worker } = require('worker_threads')

    const app = express()
    const port = 3000

    app.get('/', (req, res) => {
      // Run the blocking computation in a separate thread.
      // The file name must match the worker.js file created in the next step.
      const worker = new Worker('./worker.js');

      worker.once('message', result => {
        res.send('Completed')
      });
      worker.on('error', error => {
        console.log(error);
        // Reply so the request does not hang when the worker fails
        if (!res.headersSent) {
          res.status(500).send('Worker failed')
        }
      });
      worker.on('exit', exitCode => {
        console.log(`It exited with code ${exitCode}`);
      })
    })

    app.get('/hello', (req, res) => {
      res.send('Hello')
    })

    app.listen(port, () => {
      console.log(`Example app listening on port ${port}`)
    })

Create another file worker.js and add the following code

    const { parentPort } = require('worker_threads');

    // Post the result back to the main thread once the loop finishes
    parentPort.postMessage(runWhileLoop())

    function runWhileLoop() {
      let n = 10000000000;
      let fac = 1;
      for (let i = 1; i <= n; i++) {
        fac = fac * i;
      }
      return fac;
    }

Start the Express application, then hit the endpoint http://localhost:3000 from Postman.

The code inside worker.js is nothing more than a long-running loop. We can expect it to give a response in around 10 to 15 seconds. While waiting, open another tab and enter the endpoint http://localhost:3000/hello. In this case, the answer is displayed promptly.

Approach Comparison

In the first example, the application was unable to serve new requests due to the blocked event loop of the main thread.

In comparison, the second example offloads the work of the endpoint to a new thread created with new Worker(). The new thread has its own event loop, which leaves the event loop of the main thread free. As a result, the application can process new incoming requests even while it is still handling existing ones.

Performance Metrics

We applied this approach to one of our most used endpoints. While monitoring it afterwards, we observed a significant drop in the p95 latency.

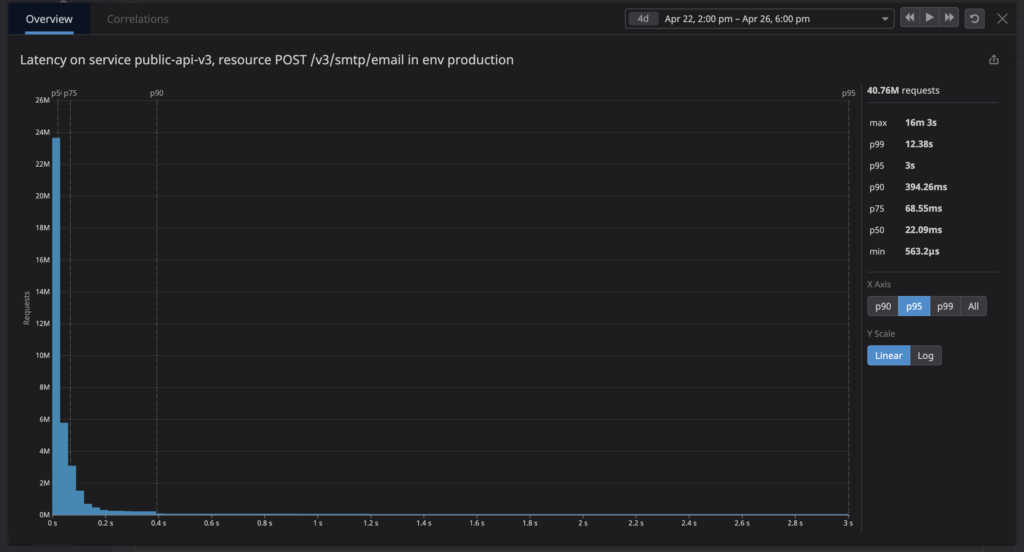

Pre-release

Before the release, the p95 was around 3s.

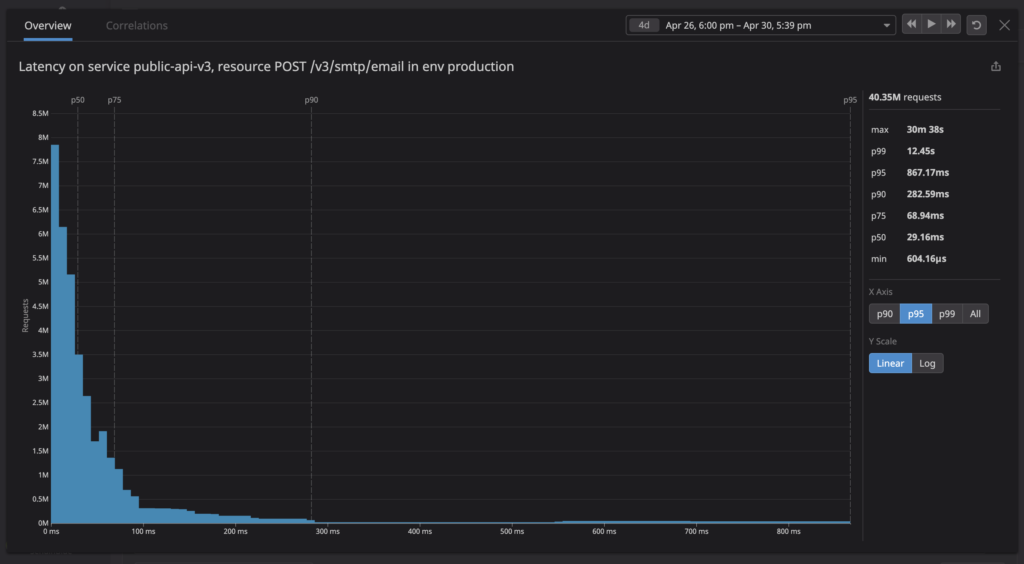

Post-release

After the release, the p95 dropped to around 800ms.
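For readers less familiar with the metric, p95 is the latency that 95% of requests stay at or below. The sketch below computes it with the nearest-rank method; this is one common definition and not necessarily the one our monitoring tool applies, and the sample latencies are made up for illustration.

```javascript
// Nearest-rank percentile: the smallest sample with at least p% of samples at or below it.
function percentile(samples, p) {
  if (samples.length === 0) throw new Error('no samples');
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100); // 1-based rank
  return sorted[Math.max(rank, 1) - 1];
}

// Made-up latencies in milliseconds, for illustration only.
const latenciesMs = [120, 150, 180, 200, 220, 250, 300, 400, 800, 3000];
console.log(percentile(latenciesMs, 95)); // logs 3000
```

A few slow requests are enough to dominate the p95, which is why removing the blocking parse from the main thread shows up so clearly in this metric.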